Weekly App Install: n8n

OK, I know it hasn't been a week; it's barely been 24 hours. But after two weeks of being relatively unsuccessful (I still consider experiments a success even when they fail to work properly, but it's still annoying), I needed a quick win. This morning I got one and wanted to blog it.

I'll be the first to admit my ability to program is incredibly limited. I can parse some PHP and write some very basic code, but it's not a strong skill. I remember that when we were first building the Domain of One's Own platform at UMW, Martha Burtis and I would use a site called ItDuzzIt (apparently bought out and closed by Intuit), which was a very flexible sort of "If this then that" with a drag-and-drop interface. I believe we used it mostly for feeding in CSV files and parsing the data to do a variety of things. These days there's Zapier, which is pretty nice, and I'm a regular user of it. But Zapier can get expensive quite fast if you have a large spreadsheet of data to process: they calculate usage by the number of tasks, so if you're doing more than one thing for each spreadsheet row, each of those tasks counts towards your quota. A specific use case for me in the past has been that our schools would provide a spreadsheet of accounts they want removed from their server, typically in the form of email addresses. I would use the WHMCS API to cross-check those emails for active hosting accounts or domains, then run actions to suspend or terminate them and update fields in the database for historical notes.
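To make that spreadsheet use case a little more concrete, here is a rough Python sketch of the first half of it: pull the email addresses out of a CSV and sort them by whether they have an active service. The column name `email` and the `has_active_service` callback are assumptions made up for illustration; the callback stands in for whatever lookup you would really do (a WHMCS API call, for example), which is not shown here.

```python
import csv
import io
from typing import Callable, List, Tuple


def read_emails(csv_text: str, column: str = "email") -> List[str]:
    """Return normalized, de-duplicated addresses from one CSV column.

    The column name "email" is an assumption; use whatever header the
    spreadsheet actually has.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    seen = set()
    emails = []
    for row in reader:
        email = (row.get(column) or "").strip().lower()
        if email and email not in seen:
            seen.add(email)
            emails.append(email)
    return emails


def split_by_status(
    emails: List[str],
    has_active_service: Callable[[str], bool],
) -> Tuple[List[str], List[str]]:
    """Split addresses into (active, inactive) using a caller-supplied lookup.

    has_active_service is hypothetical: it represents the real check against
    the billing system and must be provided by the caller.
    """
    active, inactive = [], []
    for email in emails:
        (active if has_active_service(email) else inactive).append(email)
    return active, inactive
```

Keeping the lookup as a plain function argument means the parsing can be tried on a sample spreadsheet before any real account gets touched.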

So I wondered if there was anything self-hosted that might get at this same idea of making easy connector workflows, and came across n8n. In many ways it reminds me a bit of the old Yahoo Pipes, with lots of integrations for third-party apps already baked in. Best of all, it's free and open source, so let's see what it might take to run it.

This is another Node.js app, but this time there's decent information on running it as a Docker container so I figured we'd give that a go.

docker run -it --rm \

--name n8n \

-p 5678:5678 \

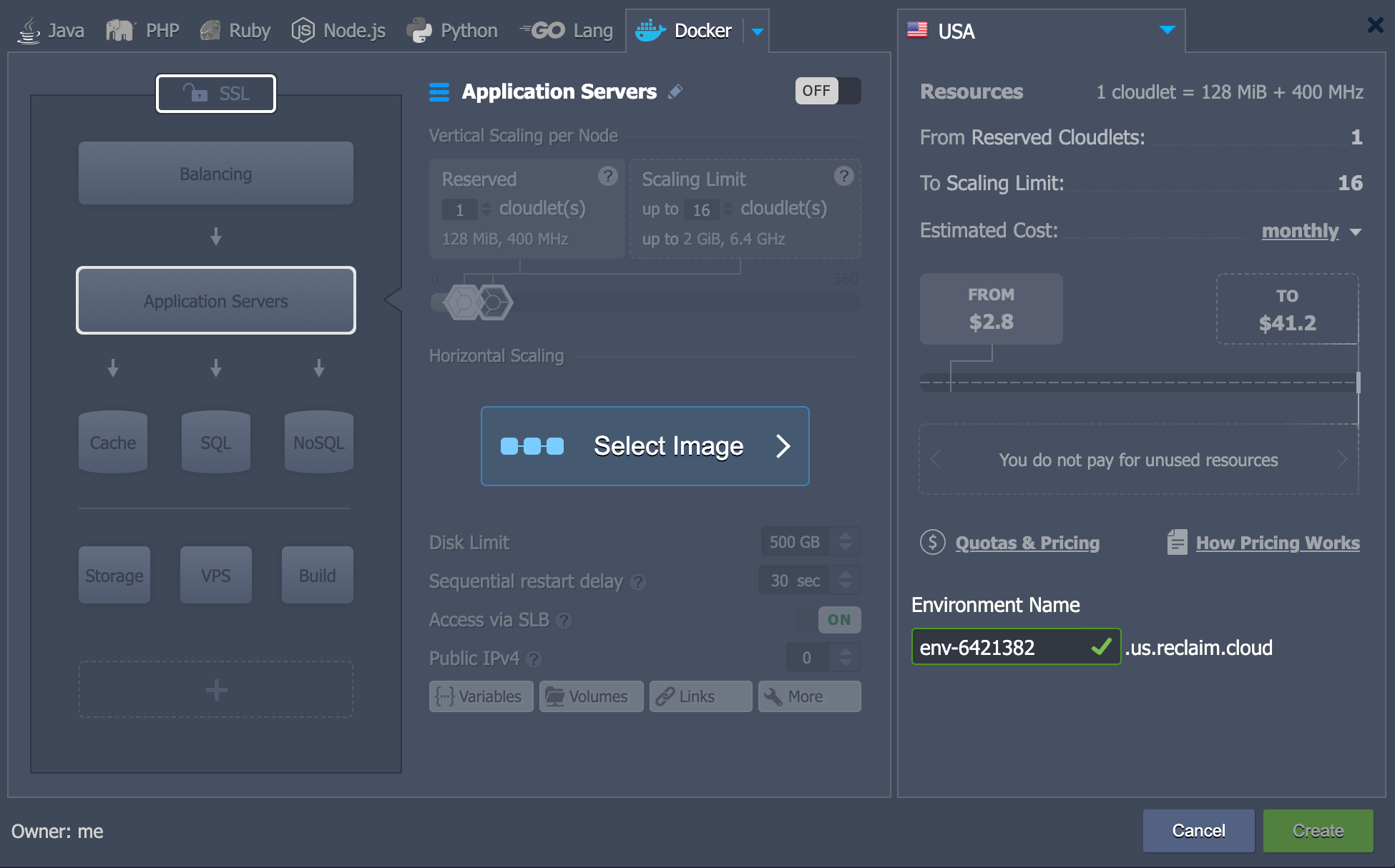

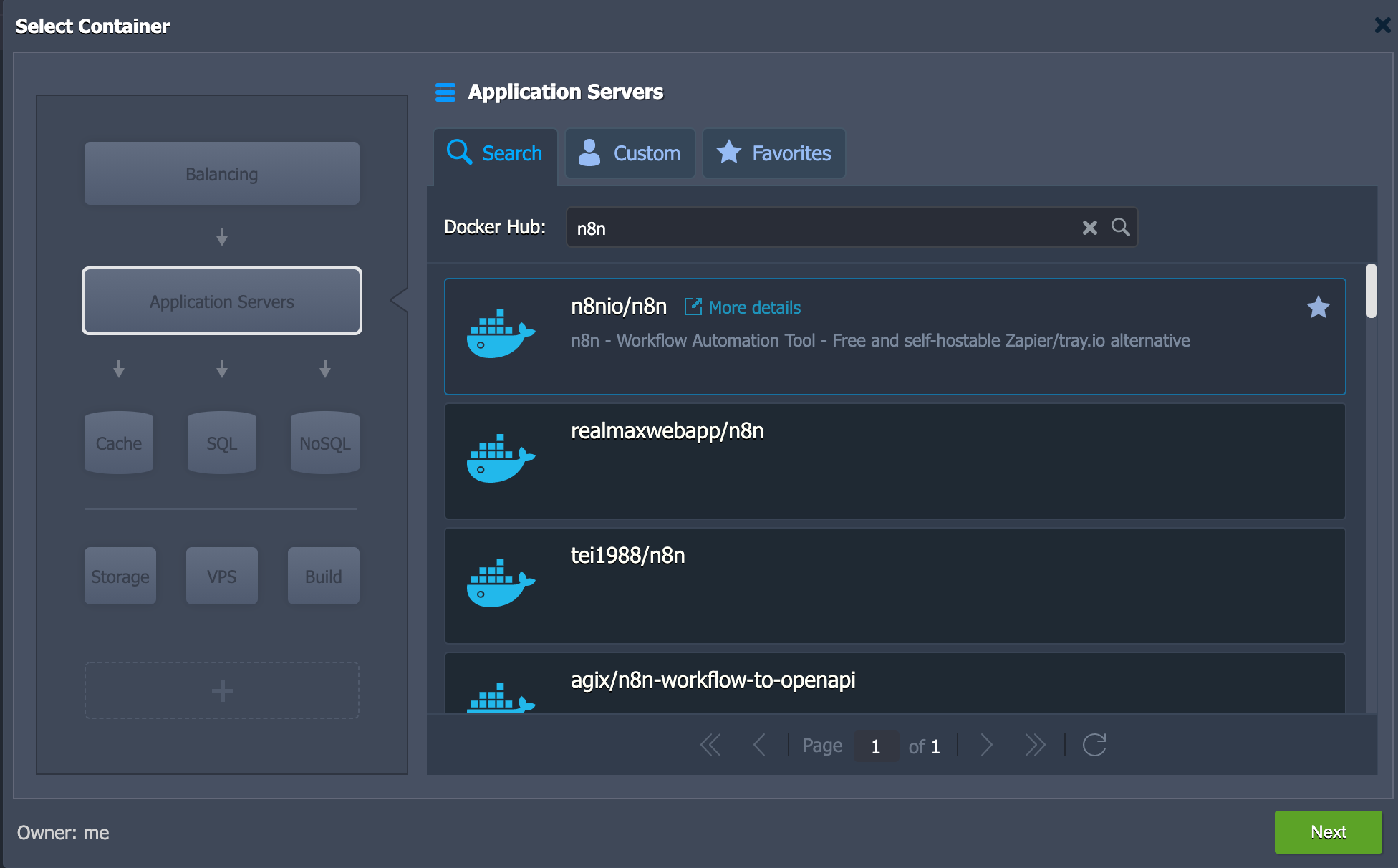

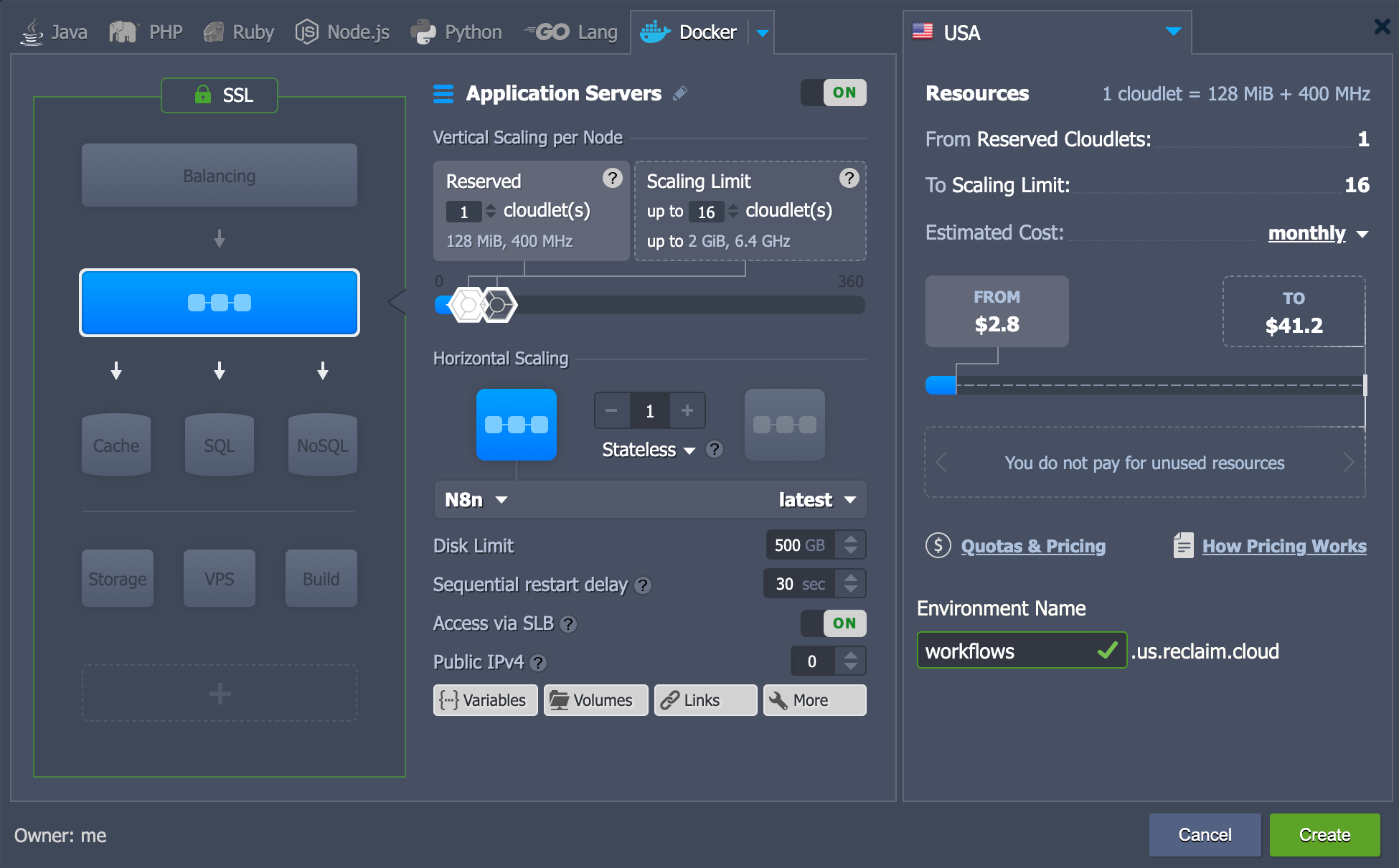

n8nio/n8nSince this is a single container we should be able to pull up the image in Reclaim Cloud directly rather than creating a full Docker Engine container.

Here I'm searching for the image (the search runs against Docker Hub). I've also enabled SSL at the top of the stack and given it the environment name workflows.us.reclaim.cloud.

One cool thing about the way Reclaim Cloud handles Docker images like this is that it will look for any ports being exposed by the container and proxy them to the shared load balancer. So even though the app runs on port 5678, the cloud environment should be able to load it cleanly on port 443 (basically by loading the https version of the URL).

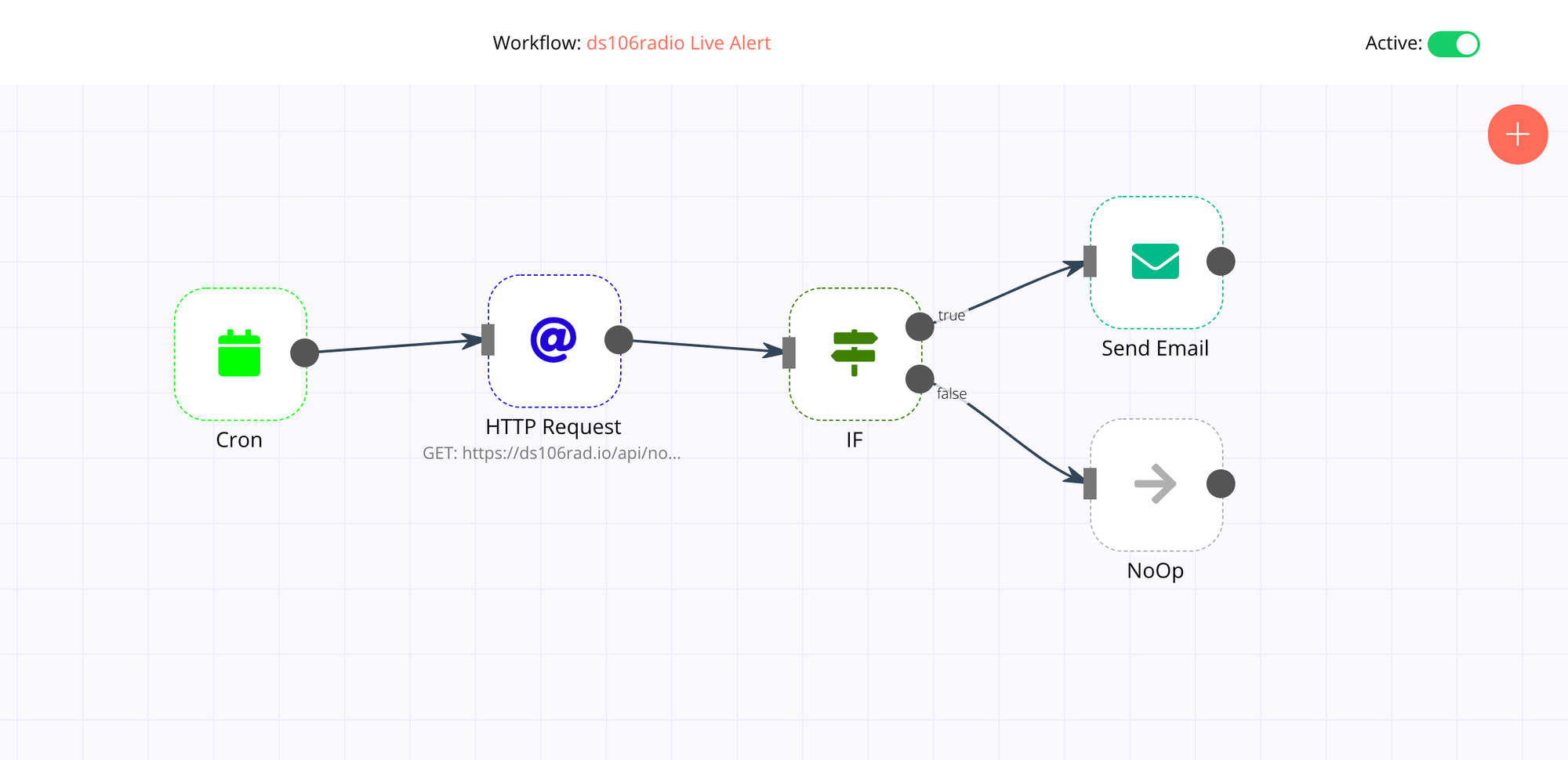

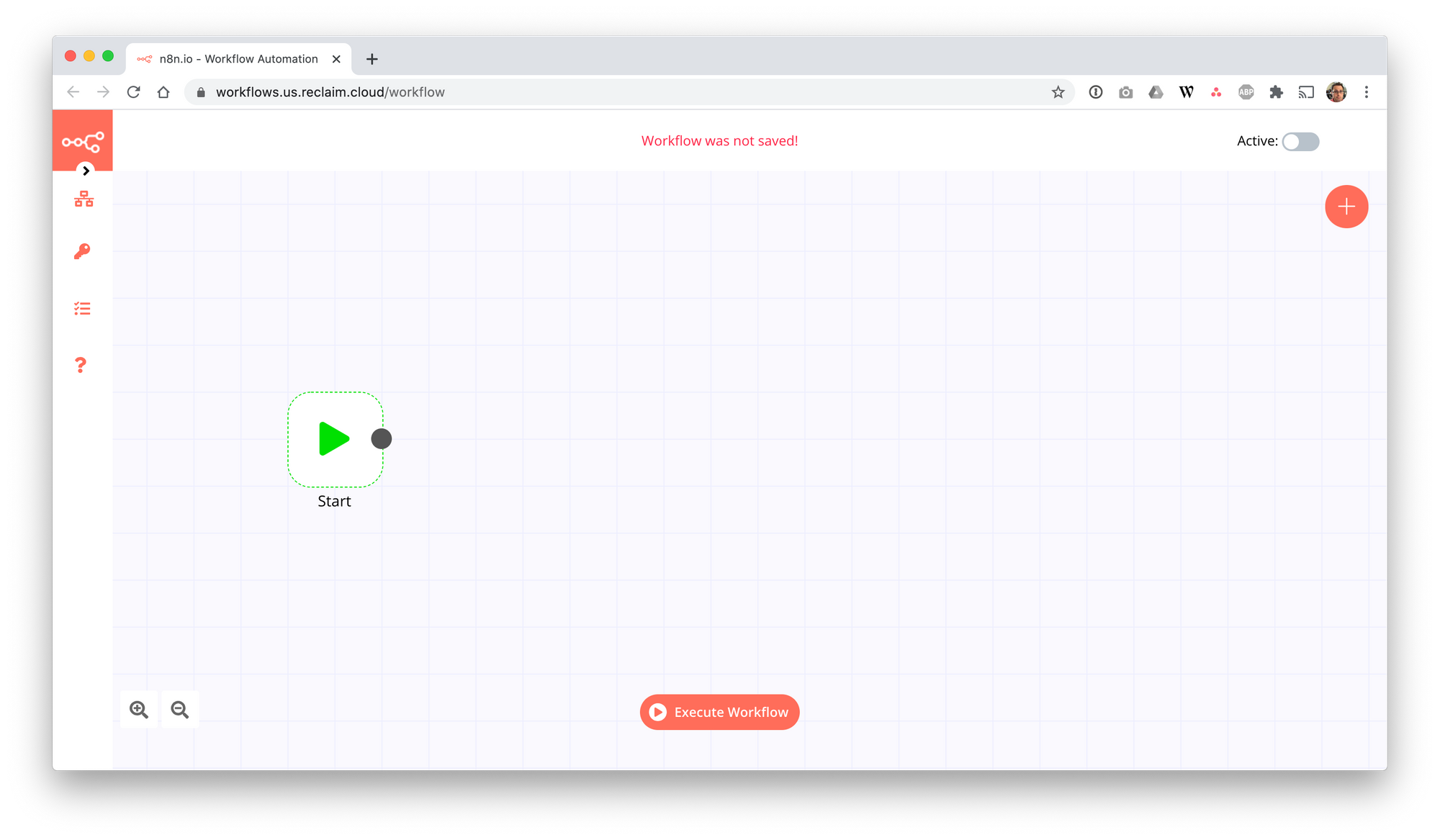

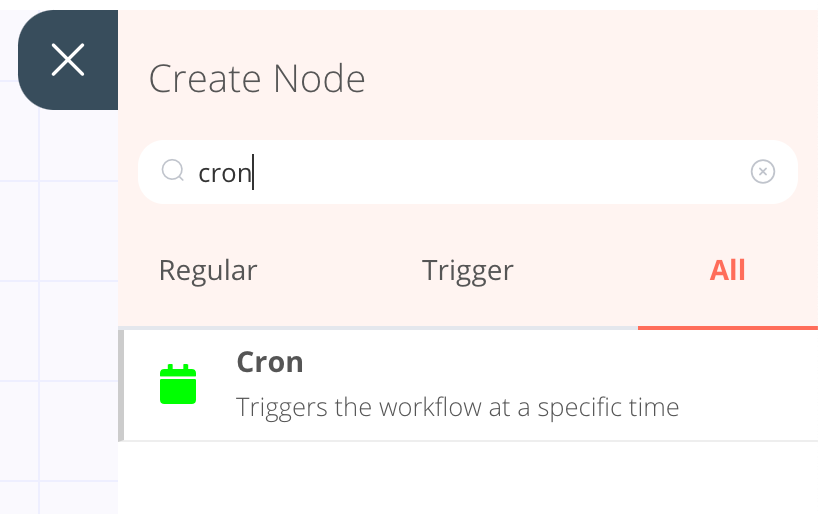

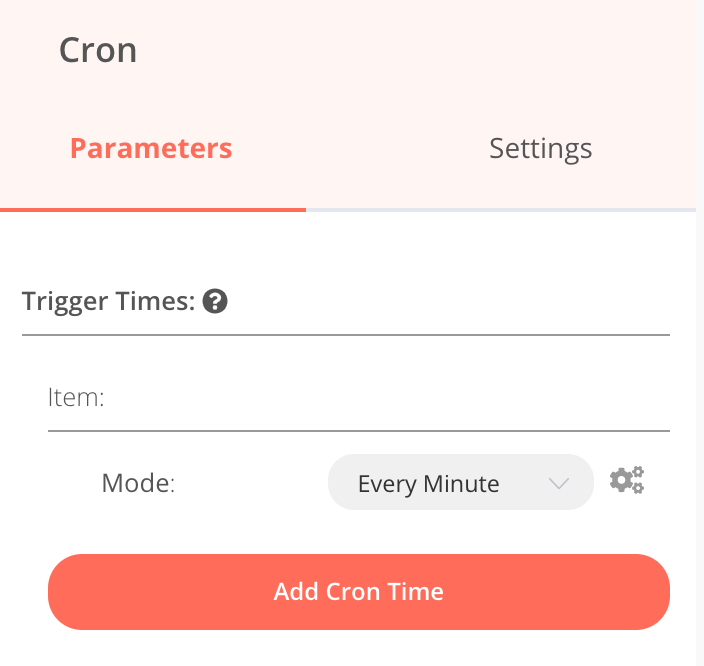

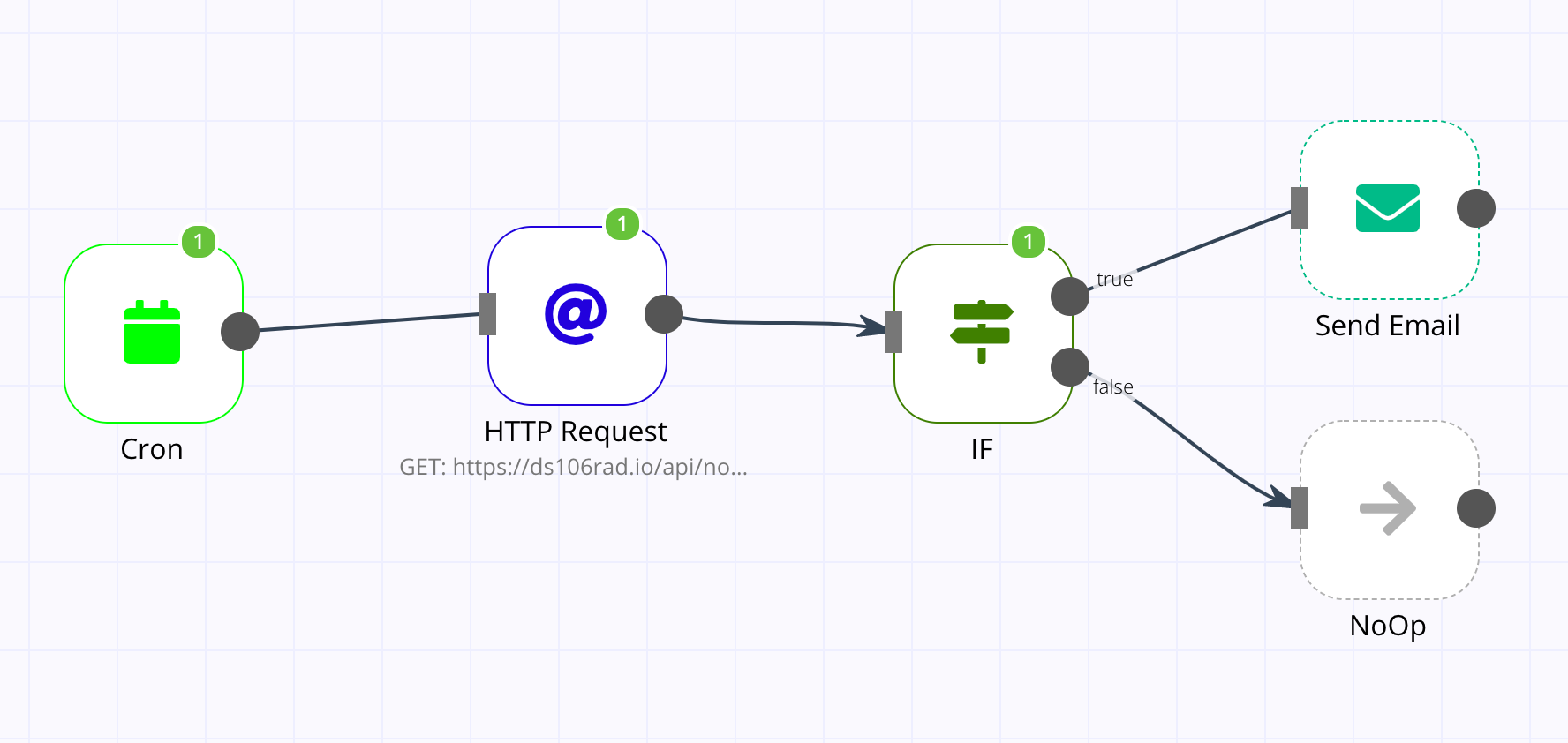

Success! Our app is up and running. The documentation is pretty good, so let's see if we can set up a workflow and test it. It all starts with a trigger of some kind. I had the idea to grab the data from ds106radio's API at https://ds106rad.io/api/nowplaying and check whether someone was live. There is a cron module that lets you run a task at particular intervals, just like a typical cron job, so we'll start there and have it run every minute to kick off our workflow.

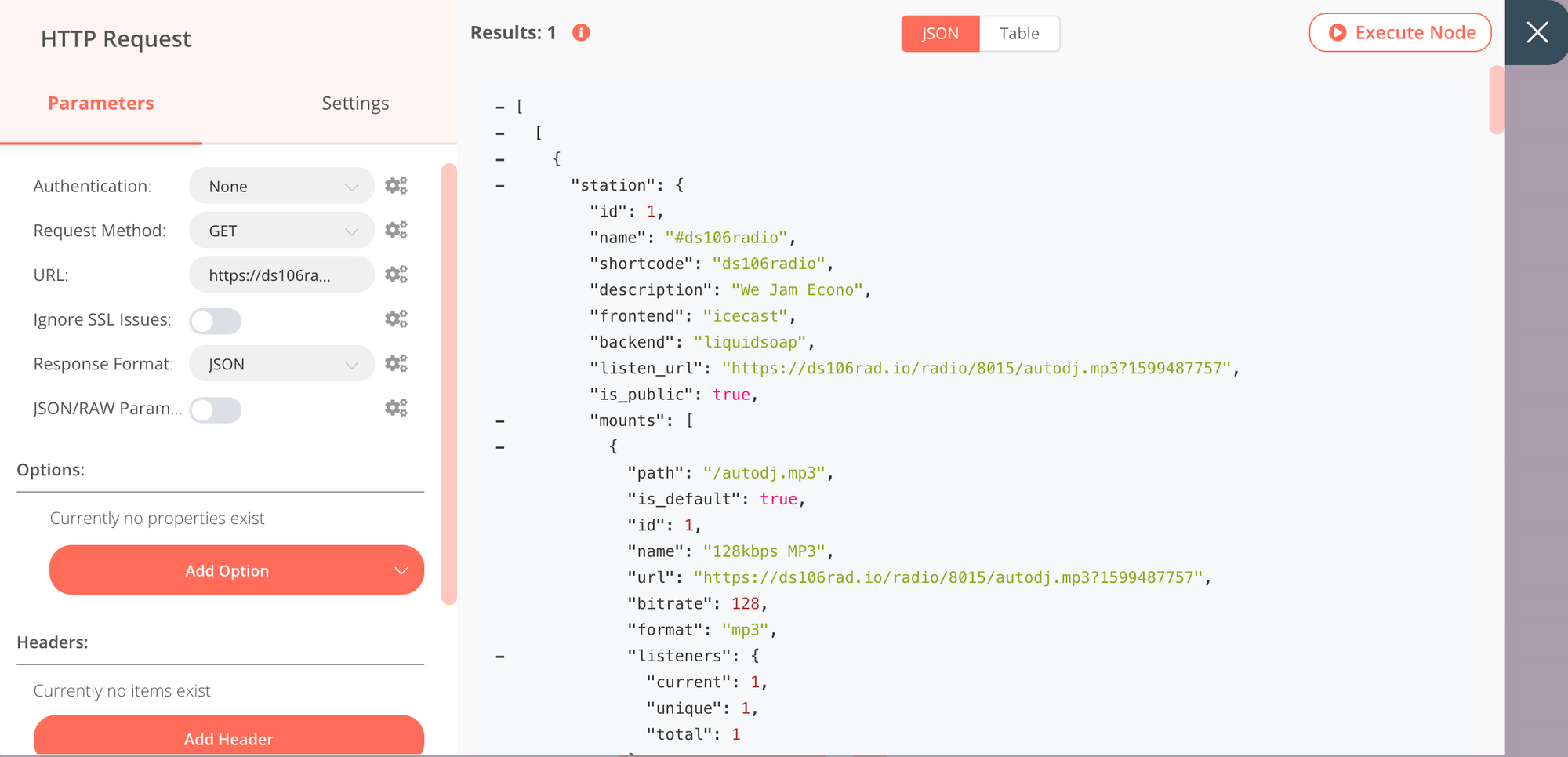

Now we need to grab the data from the API, which is a simple GET request to the URL https://ds106rad.io/api/nowplaying. The n8n interface also lets you execute the HTTP request and confirm you are receiving the data properly.

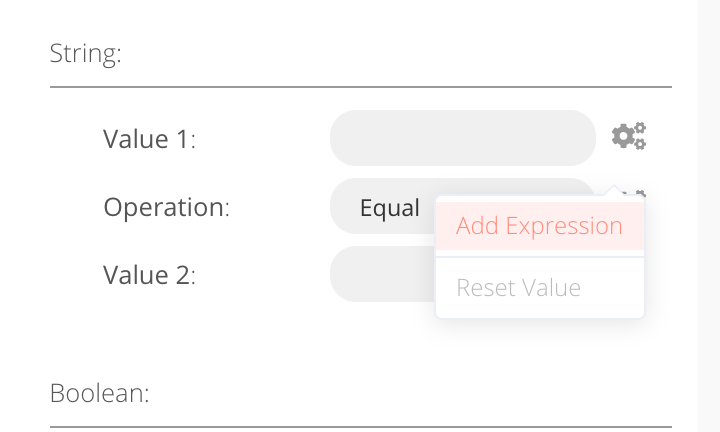

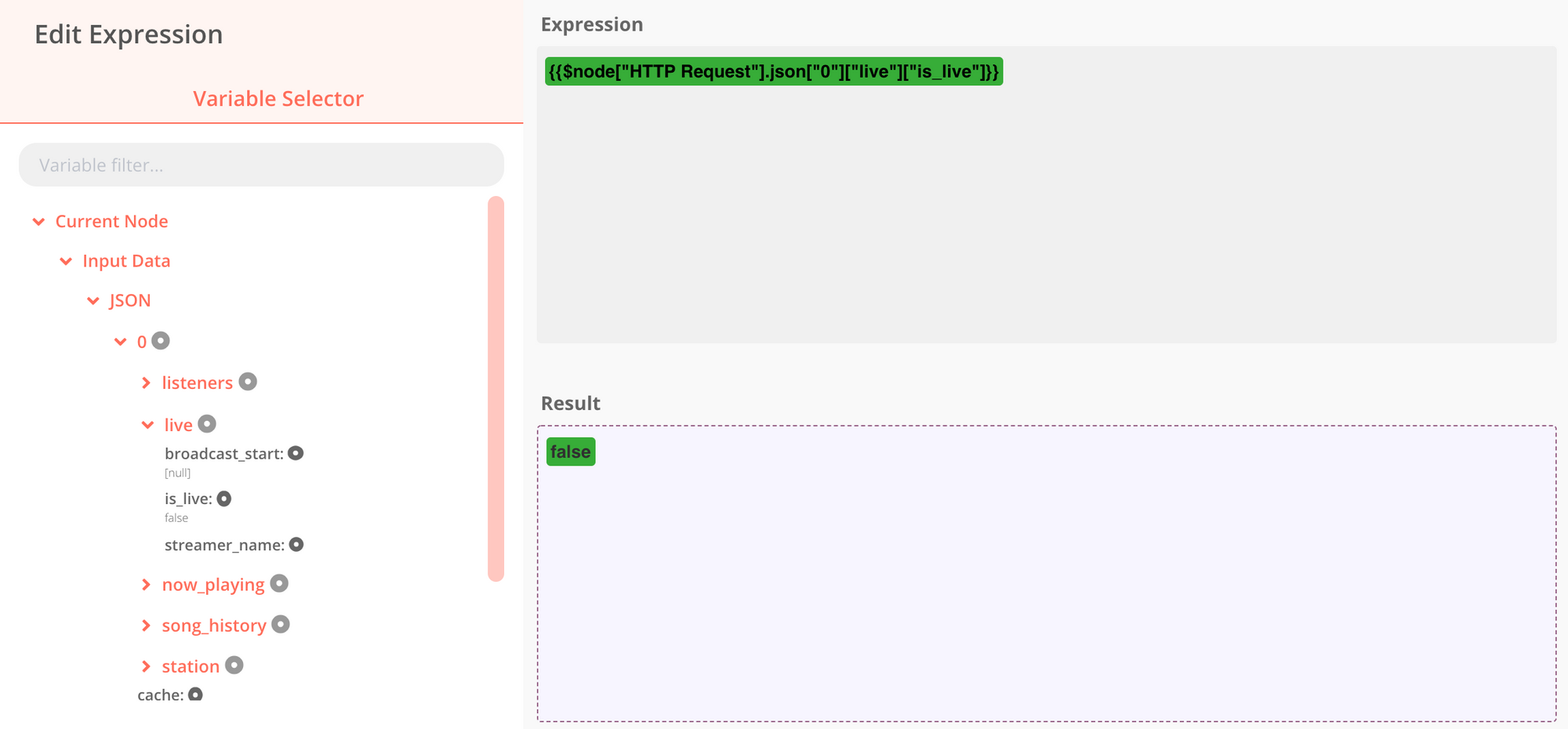

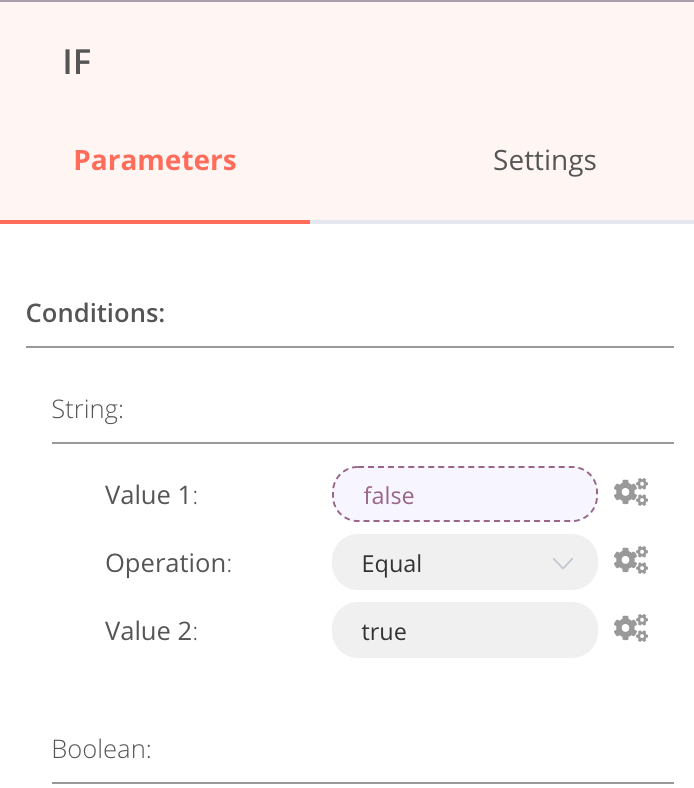
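For anyone curious what that HTTP request step amounts to outside of n8n, here is a minimal Python sketch of the same GET using only the standard library. The function name `fetch_json`, the timeout, and the `opener` parameter are my own additions for illustration (the last one just makes it possible to try the function without hitting the network); none of this is how n8n does it internally.

```python
import json
import urllib.request

NOW_PLAYING_URL = "https://ds106rad.io/api/nowplaying"


def fetch_json(url=NOW_PLAYING_URL, timeout=10.0, opener=urllib.request.urlopen):
    """Send a GET request to url and return the decoded JSON body.

    opener defaults to urllib.request.urlopen; passing a stand-in makes the
    function testable offline (an assumption of this sketch, not of n8n).
    """
    request = urllib.request.Request(url, headers={"Accept": "application/json"})
    with opener(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Print the top-level type so you can see what came back
    # before deciding which field to compare on.
    data = fetch_json()
    print(type(data).__name__)
```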

Now we need an action. But I don't want an email every minute, so before taking any action we want to parse the data and check whether someone is actually live. There's an If module that will help us with that.

The If module lets us set up a string comparison and even pulls in the data directly from the previous HTTP request, so we can pick which string from the API call to use in the comparison. In this case I grab the value of is_live, and the If module will send the workflow down the True branch whenever the request returns that field as "true".

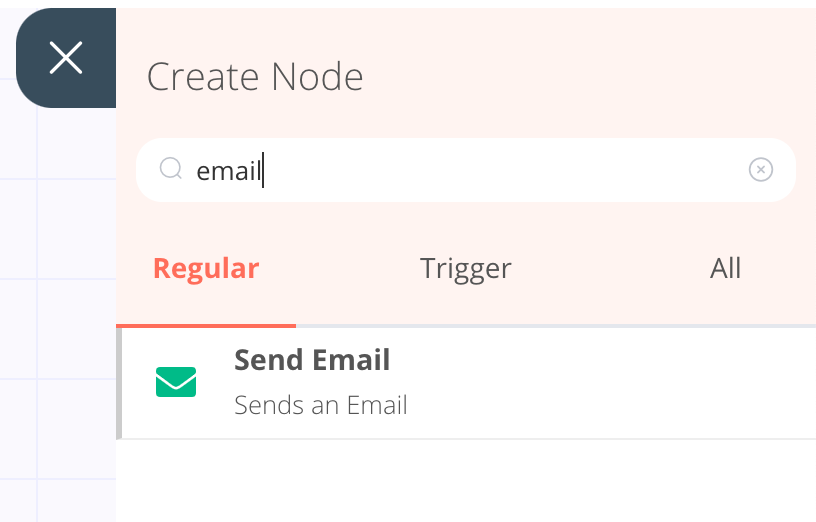

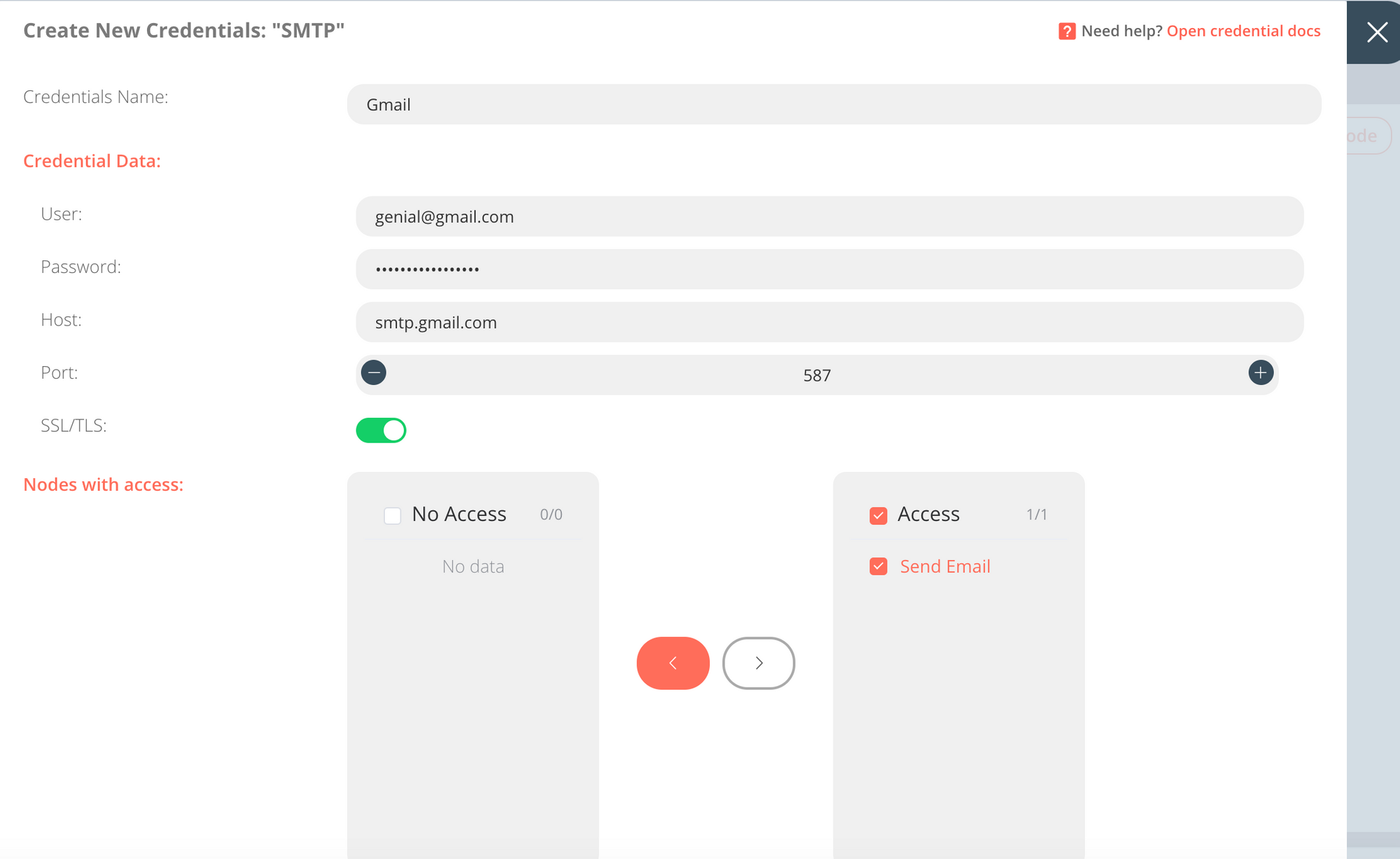
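The same check can be sketched in a few lines of Python. The only thing taken from the workflow above is that the response contains a field called `is_live`; where exactly it sits in the JSON is not assumed, so this sketch simply searches nested dictionaries and lists for the first key with that name. The function names are made up for illustration.

```python
def find_is_live(payload):
    """Depth-first search for the first "is_live" key; return its value or None."""
    if isinstance(payload, dict):
        if "is_live" in payload:
            return payload["is_live"]
        children = payload.values()
    elif isinstance(payload, list):
        children = payload
    else:
        return None
    for child in children:
        found = find_is_live(child)
        if found is not None:
            return found
    return None


def someone_is_live(payload) -> bool:
    """True when is_live is the boolean True or the string "true"."""
    value = find_is_live(payload)
    if isinstance(value, str):
        # Mirrors a string comparison against "true".
        return value.strip().lower() == "true"
    return value is True
```

Accepting both the boolean and the string form is a hedge: JSON booleans arrive in Python as `True`/`False`, while a string comparison sees the text "true".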

The final piece is sending an email. The email module requires SMTP credentials so I set those up using Gmail's SMTP option.

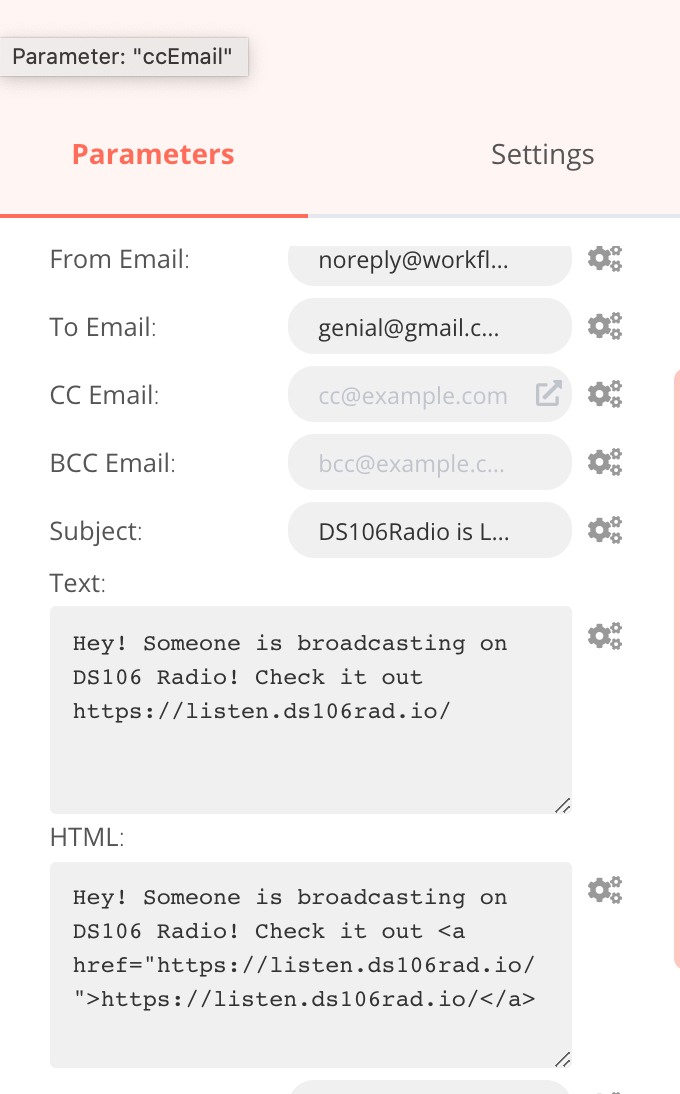
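As a point of comparison, here is roughly what sending that notification over SMTP looks like in plain Python. This is a sketch under assumptions, not a fix for anything in n8n: the addresses, subject, and wording are placeholders, and the defaults of `smtp.gmail.com` on port 587 with STARTTLS are Gmail's standard submission settings. Gmail typically expects an app password here rather than the regular account password.

```python
import smtplib
from email.message import EmailMessage


def build_alert(sender, recipient, streamer=""):
    """Build the notification email; the wording is a placeholder."""
    message = EmailMessage()
    message["From"] = sender
    message["To"] = recipient
    message["Subject"] = "ds106radio is live"
    who = streamer or "Someone"
    message.set_content(f"{who} is broadcasting live on ds106radio right now.")
    return message


def send_alert(message, username, password, host="smtp.gmail.com", port=587):
    """Send the message over SMTP with STARTTLS.

    username and password are the SMTP credentials (for Gmail, typically
    an app password).
    """
    with smtplib.SMTP(host, port, timeout=30) as smtp:
        smtp.starttls()
        smtp.login(username, password)
        smtp.send_message(message)
```

Splitting the message building from the sending makes it easy to inspect the email before any credentials are involved.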

Now I'll be honest: in testing I never got an email from this module, so I'll have to dig deeper into that. But what's cool is you could also use the Twilio action to get text messages, or write the data to a spreadsheet in Airtable. The actions you can take on data are very wide-ranging. Here's how our workflow looks, and you can see how you connect the various pieces.
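If it helps to see the shape of the workflow as code, the four pieces (trigger, fetch, check, action) can be wired together like this. It is only an illustration of the flow: `fetch`, `is_live`, and `notify` are placeholder callables you would supply, and `max_runs` and `sleep` exist purely so the loop can be tried without waiting.

```python
import time


def run_once(fetch, is_live, notify):
    """One pass of the workflow: fetch, check, and act only on the true branch."""
    payload = fetch()
    if is_live(payload):
        notify(payload)
        return True
    return False


def run_every(interval_seconds, fetch, is_live, notify, max_runs=None, sleep=time.sleep):
    """Repeat run_once on a fixed interval, like a cron trigger.

    Returns how many passes ended in a notification. With max_runs=None the
    loop runs until interrupted.
    """
    runs = 0
    fired = 0
    while max_runs is None or runs < max_runs:
        if run_once(fetch, is_live, notify):
            fired += 1
        runs += 1
        if max_runs is None or runs < max_runs:
            sleep(interval_seconds)
    return fired
```

Note that, written this way, the action fires on every pass for as long as someone stays live. Remembering the previous result and notifying only when it flips from false to true would be one way to cut that down.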

I will definitely be using this app more. One piece I need to figure out is how to add authentication on top of the app, because right now it is wide open: anyone going there would be able to see the workflows I've created (and even use my credentials). It looks like this guide would apply for getting that set up, which I'll be doing. Overall, though, not only am I happy to have a win on one of these blog post explorations, but this one is actually useful enough that I plan to keep it as a tool in my tool belt, and hopefully you find it useful too!
