UMW Blogs in the Cloud

Anyone tasked with running a large WordPress Multisite install at an institutional level has likely dealt with their fair share of issues in the past few years, from database scaling under heavy growth to the constant barrage of spam and login attempts that can DDoS a server and bring it to its knees. We've been through it all with UMW Blogs and had plenty of downtime along the way as we made changes left and right, playing whack-a-mole with the moving target of taming this beast. Typically our load issues were at their worst at the start of the semester, when use would be at its peak with students getting new sites up and running. Summers and holidays tend to be much quieter and thus the server typically performs better. Over the years we've scaled that server, which actually started out (not for terribly long) as a shared hosting account on Bluehost before spending many years at Cast Iron Coding in a dedicated environment and then, for the past year and a half, on a dedicated server through a state vendor. With each move we've attempted to grow the capacity in terms of both storage and raw computing power, and by the summer we found ourselves with 8 cores, 16GB of RAM, 1TB of hard drive space, and still struggling with downtime in a period when issues were typically few and far between.

Alongside all this we were starting to have discussions with our Procurement office about the ability to pilot some work in Amazon Web Services. I had done plenty of experimentation in AWS in the past and we wanted to formalize that as a more sustained pilot for our use in the department. I was pleased that by the summer we had the green light to start experimenting as long as we kept the cost below $2k (which we figured would be quite manageable with costs on the order of pennies per gigabyte). Ryan had been experimenting with different options for pushing backups to S3 storage and I was playing with different EC2 images to run various software installations. With UMW Blogs performing so poorly over the summer, though, I had real concerns about what we were in for this Fall and mentioned it in a meeting around early August. It was Ryan who had the idea that perhaps we could dip our toes into the water by migrating the database that UMW Blogs runs on to the AWS Relational Database Service (RDS). I thought it was a great idea, since the actual migration of that data would be pretty straightforward and we had wanted to start breaking out the various services (Apache, MySQL, file content) and moving them to AWS eventually.

Moving a database to RDS is pretty straightforward if you've ever exported a database in the past. You're basically taking a SQL file of the contents of an existing database and writing it to a database on another server. It just so happens this "server" is a service running on AWS. Amazon provides you with credentials and a hostname so really it's just a matter of running a command like this:

mysql -h hostname.awsdomain.org -u username -p database_name < file.sql
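The same export-then-import pattern extends to more than one database. Here is a minimal sketch of how that loop might look, not the exact commands we ran: the hostname and username are the same placeholders as above, the database names you pass in are up to you, and it assumes each target database already exists on the RDS side. It defaults to a dry run that only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: copy several MySQL databases to RDS, one at a time.
# Host, user, and database names are placeholders, not real UMW Blogs values.
set -eu

RDS_HOST="${RDS_HOST:-hostname.awsdomain.org}"  # endpoint Amazon gives you
RDS_USER="${RDS_USER:-username}"
DRY_RUN="${DRY_RUN:-1}"                         # 1 = only print the commands

migrate_db() {
  db="$1"
  file="${db}.sql"
  if [ "$DRY_RUN" = "1" ]; then
    echo "mysqldump --single-transaction ${db} > ${file}"
    echo "mysql -h ${RDS_HOST} -u ${RDS_USER} -p ${db} < ${file}"
  else
    # Export from the local MySQL server to a SQL file.
    # --single-transaction gives a consistent snapshot for InnoDB tables.
    mysqldump --single-transaction "$db" > "$file"
    # Import into the (already created) database of the same name on RDS.
    # -p prompts for the password each time.
    mysql -h "$RDS_HOST" -u "$RDS_USER" -p "$db" < "$file"
  fi
}

# Usage: ./migrate.sh db_one db_two ...
for db in "$@"; do
  migrate_db "$db"
done
```

Run it with `DRY_RUN=0` once the printed commands look right. Going one database at a time also makes it easy to pick up where you left off if a transfer dies partway through.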

Now I make that sound pretty straightforward, but it should also be noted that UMW Blogs is 16 databases and comes to around 27GB of data in MySQL alone, so a few short commands weren't exactly going to be a quick thing. We decided to schedule some downtime about a week before the start of classes to move it all over and make sure it worked. We figured 2 days would be pretty safe and set aside a Friday and Saturday for it to be down, thinking we'd shoot for being done by Friday evening and if not we could come back in on Saturday and finish up. The actual migration to RDS went off without a hitch, although it took ~6 hours for everything to transfer over. Still, we figured we were going to finish up with plenty of time before the day was out Friday. But then we brought it back online and... it was slow. Possibly slower than before. Suddenly our dreams of the cloud were coming crashing down. AWS was supposed to be really fast and our dedicated server no longer had to run MySQL, so what was going on? After some looking around Ryan found a few mentions of people saying that RDS is really designed to work alongside EC2 instances and that running it as a remote MySQL host wasn't advised due to latency: every query WordPress makes has to travel from our server out to Amazon and back. So much for that!

So we could either scrap the whole project, which was very disheartening considering we'd still have the same load issues we had previously, or... what? I wasn't exactly sure what the alternative was, but Ryan threw out the idea: "Why don't we move UMW Blogs to EC2?" I was highly skeptical. Moving a database seemed like no big deal; moving an entire WordPress install to cloud servers seemed monumental in comparison, with so many unknowns. But he convinced me by pointing out we could make use of our existing dedicated server to keep storing the majority of the files (the blogs.dir directory of uploads from sites), so the move wouldn't be terribly difficult: basically get a WordPress multisite instance up and running, mount blogs.dir, and connect to the database we already had at RDS. Easy, right?

So we kept working and got a quick instance up and running before the end of the day Friday. Ryan planned to set up NFS between the instance and our dedicated server and we'd meet on Saturday to finalize it all. We hit a snag because the NFS ports were blocked by a hardware firewall at the server provider, and had to switch to SSHFS, which sets up a file mount over an SSH connection. It works just as well, if a bit buggy and not as widely supported. The port thing is actually something that came up pretty often in AWS as well, since you have to be pretty particular about opening ports between various services. By default Amazon doesn't make anything available without a private keypair and SSH connection, so you have to set up security groups to give the various services enough access to talk to each other. We rsync'd the contents of our WordPress directory to the EC2 server, mounted blogs.dir to its location, and we already had the RDS information in wp-config.php from the day before, so we fired up Apache. We had quite a bit of trial and error getting the mounts in the right location for the URL structure to be the same, and on top of that it's damn hard to set up a server from scratch. I quickly realized just how much I had been relying on cPanel/WHM for some of the basic stuff like vhosts in Apache and DNS configurations. Ryan runs his own VPS and was a bit more adept at those types of configurations, but I think even he would admit that getting multisite to run properly as a subdomain install with domain mapping isn't exactly straightforward (and to top it off I'm a sucker for CentOS and had set that up as the distro, and he's an Ubuntu person, so everything was in different locations).
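For anyone wanting to try something similar, the rsync and SSHFS steps might look roughly like the sketch below. This is not our actual setup: the old server's address and both WordPress paths are made-up placeholders, and the reconnect and keepalive options are my assumption about reasonable settings for keeping the mount alive. Like any script that touches a live server, it defaults to a dry run that only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: pull the WordPress files onto the EC2 instance, then mount blogs.dir
# from the old dedicated server over SSHFS.
# Every host, user, and path here is a placeholder.
set -eu

OLD_SERVER="${OLD_SERVER:-user@old-server.example.edu}"
REMOTE_WP="${REMOTE_WP:-/var/www/html}"   # WordPress root on the old server
LOCAL_WP="${LOCAL_WP:-/var/www/html}"     # WordPress root on the EC2 instance
DRY_RUN="${DRY_RUN:-1}"                   # 1 = only print the commands

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

sync_wordpress() {
  # Copy core, themes, plugins, and wp-config.php, but skip the uploads,
  # which stay on the old server and get mounted instead.
  run rsync -az --exclude=wp-content/blogs.dir/ "${OLD_SERVER}:${REMOTE_WP}/" "${LOCAL_WP}/"
}

mount_blogs_dir() {
  run mkdir -p "${LOCAL_WP}/wp-content/blogs.dir"
  # reconnect + keepalives help the mount recover from dropped connections.
  # allow_other lets the web server user read the mount; for non-root mounts
  # it needs user_allow_other enabled in /etc/fuse.conf.
  run sshfs "${OLD_SERVER}:${REMOTE_WP}/wp-content/blogs.dir" "${LOCAL_WP}/wp-content/blogs.dir" -o reconnect,ServerAliveInterval=15,ServerAliveCountMax=3,allow_other
}

sync_wordpress
mount_blogs_dir
```

Keeping the mount point at the same relative path (wp-content/blogs.dir) is what lets the existing upload URLs keep working without touching the database.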

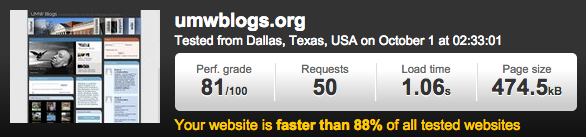

But you know what? We got it working. We had to push into that Sunday but by about noon it was up and running and I'll be damned if it wasn't worth it. For the first time in a long time it was fast. No, maybe you don't understand me. It was fucking fast.

We had just gotten used to waiting a few seconds with each click to a new page or dashboard area and now there was almost no delay. We had a few times where SSHFS would tank and take all the images with it and we'd have to remount the directory, but we set it up to automount and it's been behaving ever since. We felt really good about the performance and even better as the semester began and we braced ourselves for the impact of new signups and the typical load of a Fall startup. And it never came. Zero downtime. Week one came and went. Week two. Week three. It just kept running, and running really fast to boot. To add to that, when all was said and done we were spending an estimated $300/month for the resources, or about $3,600/year. Compare that to the $8k/year we were spending on the dedicated server that was continually falling down and had no capacity to scale up on the fly and you start to realize the potential in a system like this.

We didn't have any downtime until last week, when we started running into a different issue, but I'll save that for a followup post where I'll talk about some of the differences of running on a cloud infrastructure like AWS and some pitfalls we're quickly learning to account for and avoid. In particular, there's the pricing model of all-you-can-eat rice charged by the grain, where it can be confusing to know where expenditures will fall (which I think is likely at least part of what our procurement office is struggling with). But for now UMW Blogs is singing a new song with regular backups, distributed storage, and a scalable infrastructure that will serve us well as a platform for years to come.
